Ph.D. Thesis

2025

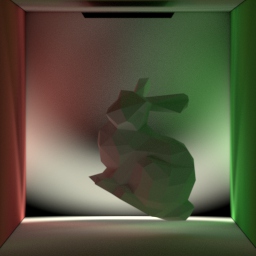

Robust Derivative Estimation with Walk on Stars

Zihan Yu, Rohan Sawhney, Bailey Miller, Lifan Wu, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2025), 44(6), December 2025

Monte Carlo methods based on the walk on spheres (WoS) algorithm offer a parallel, progressive, and output-sensitive approach for solving partial differential equations (PDEs) in complex geometric domains.

Building on this foundation, the walk on stars (WoSt) method generalizes WoS to support mixed Dirichlet, Neumann, and Robin boundary conditions.

However, accurately computing spatial derivatives of PDE solutions remains a major challenge: existing methods exhibit high variance and bias near the domain boundary, especially in Neumann-dominated problems.

We address this limitation with a new extension of WoSt specifically designed for derivative estimation.

Our method reformulates the boundary integral equation (BIE) for Poisson PDEs by directly leveraging the harmonicity of spatial derivatives.

Combined with a tailored random-walk sampling scheme and an unbiased early termination strategy, we achieve significantly improved accuracy in derivative estimates near the Neumann boundary.

We further demonstrate the effectiveness of our approach across various tasks, including recovering the non-unique solution to a pure Neumann problem with reduced bias and variance, constructing divergence-free vector fields, and optimizing parametrically defined boundaries under PDE constraints.

Image-Space Adaptive Sampling for Fast Inverse Rendering

Kai Yan, Cheng Zhang, Sébastien Speierer, Guangyan Cai, Yufeng Zhu, Zhao Dong, Shuang Zhao

ACM SIGGRAPH 2025 (conference-track full paper), August 2025

Inverse rendering is crucial for many scientific and engineering disciplines. Recent progress in differentiable rendering has led to efficient differentiation of the full image formation process with respect to scene parameters, enabling gradient-based optimization.

However, computational demands pose a significant challenge for differentiable rendering, particularly when rendering all pixels during inverse rendering from high-resolution/multi-view images. This computational cost leads to slow performance in each iteration of inverse rendering. Meanwhile, naively reducing the sampling budget by uniformly sampling pixels to render in each iteration can result in high gradient variance during inverse rendering, ultimately degrading overall performance.

Our goal is to accelerate inverse rendering by reducing the sampling budget without sacrificing overall performance. In this paper, we introduce a novel image-space adaptive sampling framework to accelerate inverse rendering by dynamically adjusting pixel sampling probabilities based on gradient variance and contribution to the loss function. Our approach efficiently handles high-resolution images and complex scenes, with faster convergence and improved performance compared to uniform sampling, making it a robust solution for efficient inverse rendering.
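The pixel-subsampling idea above can be illustrated with a minimal sketch. It assumes a generic per-pixel `importance` score (a hypothetical stand-in; the paper derives its probabilities from gradient variance and loss contribution) and shows only how sampled pixels are reweighted by their probabilities so that the estimate of the full-image sum stays unbiased. The function names are illustrative, not the authors' implementation.

```python
import random

def sample_pixels(importance, n_samples, rng):
    """Draw pixel indices with probability proportional to `importance`."""
    total = sum(importance)
    probs = [w / total for w in importance]
    indices = rng.choices(range(len(probs)), weights=probs, k=n_samples)
    return indices, probs

def estimate_sum(per_pixel_value, importance, n_samples, seed=0):
    """Estimate sum(per_pixel_value) from a random subset of pixels.

    Each sampled value is divided by its sampling probability, which keeps
    the estimator unbiased as long as every pixel with a nonzero value has
    a nonzero importance.
    """
    rng = random.Random(seed)
    indices, probs = sample_pixels(importance, n_samples, rng)
    return sum(per_pixel_value[i] / probs[i] for i in indices) / n_samples
```

When the importance is exactly proportional to the per-pixel values, every sample returns the same number and the variance vanishes; uniform importance recovers plain uniform pixel sampling.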

Unbiased Differential Visibility Using Fixed-Step Walk-on-Spherical-Caps And Closest Silhouettes

Lifan Wu, Nathan Morrical, Sai Bangaru, Rohan Sawhney, Shuang Zhao, Chris Wyman, Ravi Ramamoorthi, Aaron Lefohn

ACM Transactions on Graphics (SIGGRAPH 2025), 44(4), August 2025

Computing derivatives of path integrals under evolving scene geometry is a fundamental problem in physics-based differentiable rendering, which requires differentiating discontinuities in the visibility function. Warped-area reparameterization is a powerful technique for computing differential visibility, and its key ingredient is the construction of a velocity field that is continuous in the domain interior and agrees with defined velocities on boundaries. Robustly and efficiently constructing such fields remains challenging.

We present a novel velocity field construction for differential visibility. Inspired by recent Monte Carlo solvers for partial differential equations (PDEs), we formulate the velocity field via Laplace’s equation and solve it with a walk-on-spheres (WoS) algorithm. To improve efficiency, we introduce a fixed-step WoS that terminates random walks after a fixed step count, resulting in a continuous but non-harmonic velocity field still valid for warped-area reparameterization. Furthermore, to practically apply our method to complex 3D scenes, we propose an efficient cone query to find the closest silhouettes on a boundary. Our cone query finds the closest point under the geodesic distance on a unit sphere, and is analogous to the closest point query by WoS to compute Euclidean distance. As a result, our method generalizes WoS to perform random walks on spherical caps over the unit sphere. We demonstrate that this enables a more robust and efficient unbiased estimator for differential visibility.

Guiding-Based Importance Sampling for Walk on Stars

Tianyu Huang, Jingwang Ling, Shuang Zhao, Feng Xu

ACM SIGGRAPH 2025 (conference-track full paper), August 2025

Walk on stars (WoSt) has proven to be a powerful Monte Carlo method for solving partial differential equations, but its sampling techniques are not satisfactory, leading to high variance. We propose a guiding-based importance sampling method to reduce the variance of WoSt. Drawing inspiration from path guiding in rendering, we approximate the directional distribution of the recursive term of WoSt using online-learned parametric mixture distributions, decoded by a lightweight neural field. This adaptive approach enables importance sampling the recursive term, which lacks shape information before computation. We introduce a reflection technique to represent guiding distributions at Neumann boundaries and incorporate multiple importance sampling with learnable selection probabilities to further reduce variance. We also present a practical GPU implementation of our method. Experiments show that our method effectively reduces variance compared to the original WoSt, given the same time or the same sample budget.

2024

Differentiating Variance for Variance-Aware Inverse Rendering

Kai Yan, Vincent Pegoraro, Marc Droske, Jiří Vorba, Shuang Zhao

ACM SIGGRAPH Asia 2024 (conference-track full paper), December 2024

Monte Carlo methods have been widely adopted in physics-based rendering. A key property of a Monte Carlo estimator is its variance, which dictates the convergence rate of the estimator. In this paper, we devise a mathematical formulation for derivatives of rendering variance with respect to not only scene parameters (e.g., surface roughness) but also sampling probabilities. Based on this formulation, we introduce unbiased Monte Carlo estimators for those derivatives. Our theory and algorithm enable variance-aware inverse rendering which alters a virtual scene and/or an estimator in an optimal way to offer a good balance between bias and variance. We evaluate our technique using several synthetic examples.

Markov-Chain Monte Carlo Sampling of Visibility Boundaries for Differentiable Rendering

Peiyu Xu, Sai Bangaru, Tzu-Mao Li, Shuang Zhao

ACM SIGGRAPH Asia 2024 (conference-track full paper), December 2024

Physics-based differentiable rendering requires estimating boundary path integrals emerging from the shift of discontinuities (e.g., visibility boundaries). Previously, although the mathematical formulation of boundary path integrals has been established, efficient and robust estimation of these integrals has remained challenging. Specifically, state-of-the-art boundary sampling methods all rely on primary-sample-space guiding precomputed using sophisticated data structures---whose performance tends to degrade for finely tessellated geometries.

In this paper, we address this problem by introducing a new Markov-Chain-Monte-Carlo (MCMC) method. At the core of our technique is a local perturbation step capable of efficiently exploring highly fragmented primary sample spaces via specifically designed jumping rules. We compare the performance of our technique with several state-of-the-art baselines using synthetic differentiable-rendering and inverse-rendering experiments.

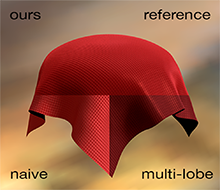

A Hierarchical Architecture for Neural Materials

Bowen Xue, Shuang Zhao, Henrik Wann Jensen, Zahra Montazeri

Computer Graphics Forum, 43(6), September 2024

Neural reflectance models are capable of reproducing the spatially-varying appearance of many real-world materials at different scales. Unfortunately, existing techniques such as NeuMIP have difficulties handling materials with strong shadowing effects or detailed specular highlights. In this paper, we introduce a neural appearance model that offers a new level of accuracy. Central to our model is an inception-based core network structure that captures material appearances at multiple scales using parallel-operating kernels and ensures multi-stage features through specialized convolution layers. Furthermore, we encode the inputs into frequency space, introduce a gradient-based loss, and apply it adaptively according to the progress of the learning phase. We demonstrate the effectiveness of our method using a variety of synthetic and real examples.

NeRF As A Non-Distant Environment Emitter in Physics-Based Inverse Rendering

Jingwang Ling, Ruihan Yu, Feng Xu, Chun Du, Shuang Zhao

ACM SIGGRAPH 2024 (conference-track full paper), July 2024

Physics-based inverse rendering enables joint optimization of shape, material, and lighting based on captured 2D images. To ensure accurate reconstruction, using a light model that closely resembles the captured environment is essential. Although the widely adopted distant environmental lighting model is adequate in many cases, we demonstrate that its inability to capture spatially varying illumination can lead to inaccurate reconstructions in many real-world inverse rendering scenarios. To address this limitation, we incorporate NeRF as a non-distant environment emitter into the inverse rendering pipeline. Additionally, we introduce an emitter importance sampling technique for NeRF to reduce the rendering variance. Through comparisons on both real and synthetic datasets, our results demonstrate that our NeRF-based emitter offers a more precise representation of scene lighting, thereby improving the accuracy of inverse rendering.

Path-Space Differentiable Rendering of Implicit Surfaces

Siwei Zhou, Youngha Chang, Nobuhiko Mukai, Hiroaki Santo, Fumio Okura, Yasuyuki Matsushita, Shuang Zhao

ACM SIGGRAPH 2024 (conference-track full paper), July 2024

Physics-based differentiable rendering is a key ingredient for integrating forward rendering into probabilistic inference and machine learning pipelines. As a state-of-the-art formulation for differentiable rendering, differential path integrals have enabled the development of efficient Monte Carlo estimators for both interior and boundary integrals. Unfortunately, this formulation has been designed mostly for explicit geometries like polygonal meshes.

In this paper, we generalize the theory of differential path integrals to support implicit geometries like level sets and signed-distance functions (SDFs). In addition, we introduce new Monte Carlo estimators for efficiently sampling discontinuity boundaries that are also implicitly specified. We demonstrate the effectiveness of our theory and algorithms using several differentiable-rendering and inverse-rendering examples.

A Differential Monte Carlo Solver For the Poisson Equation

Zihan Yu, Lifan Wu, Zhiqian Zhou, Shuang Zhao

ACM SIGGRAPH 2024 (conference-track full paper), July 2024

The Poisson equation is an important partial differential equation (PDE) with numerous applications in physics, engineering, and computer graphics. Conventional solutions to the Poisson equation require discretizing the domain or its boundary, which can be very expensive for domains with detailed geometries. To overcome this challenge, a family of grid-free Monte Carlo solutions has recently been developed. By utilizing walk-on-spheres (WoS) processes, these techniques are capable of efficiently solving the Poisson equation over complex domains.

In this paper, we introduce a general technique that differentiates solutions to the Poisson equation with Dirichlet boundary conditions. Specifically, we devise a new boundary-integral formulation for the derivatives with respect to arbitrary parameters including shapes of the domain. Further, we develop an efficient walk-on-spheres technique based on our new formulation---including a new approach to estimate normal derivatives of the solution field. We demonstrate the effectiveness of our technique over baseline methods using several synthetic examples.
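For readers unfamiliar with the walk-on-spheres process mentioned above, here is a minimal sketch of the basic (non-differential) solver. It makes simplifying assumptions that are not from the paper: the domain is the 2D unit disk, the equation is Laplace's equation (zero source term) with Dirichlet data, and the function names, `eps` shell width, and walk count are illustrative choices.

```python
import math
import random

def walk_on_spheres(x, y, boundary_value, eps=1e-3, max_steps=1000, rng=random):
    """Run one random walk from (x, y) and return the boundary value it hits."""
    for _ in range(max_steps):
        # Radius of the largest circle centered at (x, y) inside the unit disk.
        r = 1.0 - math.hypot(x, y)
        if r < eps:
            break  # Close enough to the boundary: stop the walk.
        # Jump to a uniformly random point on that circle.
        theta = rng.uniform(0.0, 2.0 * math.pi)
        x += r * math.cos(theta)
        y += r * math.sin(theta)
    # Project onto the unit circle and read the Dirichlet data there.
    norm = math.hypot(x, y)
    return boundary_value(x / norm, y / norm)

def solve_laplace(x, y, boundary_value, n_walks=20000, seed=0):
    """Monte Carlo estimate of the harmonic function at (x, y)."""
    rng = random.Random(seed)
    total = sum(walk_on_spheres(x, y, boundary_value, rng=rng)
                for _ in range(n_walks))
    return total / n_walks
```

With boundary data `g(x, y) = x`, the exact solution is `u(x, y) = x`, so `solve_laplace(0.3, 0.2, lambda bx, by: bx)` should return a value close to 0.3, up to Monte Carlo noise and the small bias introduced by the `eps` shell.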

Estimating Uncertainty in Appearance Acquisition

Zhiqian Zhou, Cheng Zhang, Zhao Dong, Carl Marshall, Shuang Zhao

Eurographics Symposium on Rendering (EGSR), July 2024

The inference of material reflectance from physical observations (e.g., photographs) is usually under-constrained, causing point estimates to suffer from ambiguity and, thus, generalize poorly to novel configurations. Conventional methods address this problem by using dense observations or introducing priors.

In this paper, we tackle this problem from a different angle by introducing a method to quantify uncertainties. Based on a Bayesian formulation, our method can quantitatively analyze how under-constrained a material inference problem is (given the observations and priors), by sampling the entire posterior distribution of material parameters rather than optimizing a single point estimate as given by most inverse rendering methods. Further, we present a method to guide acquisition processes by recommending viewing/lighting configurations for making additional observations. We demonstrate the usefulness of our technique using several synthetic and one real example.

ReflectanceFusion: Diffusion-based text to SVBRDF Generation

Bowen Xue, Giuseppe Claudio Guarnera, Shuang Zhao, Zahra Montazeri

Eurographics Symposium on Rendering (EGSR), July 2024

We introduce ReflectanceFusion (Reflectance Diffusion), a new neural text-to-texture model capable of generating high-fidelity SVBRDF maps from textual descriptions. Our method leverages a tandem neural approach, consisting of two modules, to accurately model the distribution of spatially varying reflectance as described by text prompts. First, we employ a pre-trained Stable Diffusion 2 model to generate a latent representation that informs the overall shape of the material and serves as our backbone model. Then, our ReflectanceUNet enables fine-tuning control over the material's physical appearance and generates SVBRDF maps. The ReflectanceUNet module is trained on an extensive dataset comprising approximately 200,000 synthetic spatially varying materials. Our generative SVBRDF diffusion model allows for the synthesis of multiple SVBRDF estimates from a single textual input, offering users the possibility to choose the output that best aligns with their requirements. We illustrate our method's versatility by generating SVBRDF maps from a range of textual descriptions, both specific and broad. Our ReflectanceUNet model can integrate optional physical parameters, such as roughness and specularity, enhancing customization. When the backbone module is fixed, the ReflectanceUNet module refines the material, allowing direct edits to its physical attributes. Comparative evaluations demonstrate that ReflectanceFusion achieves better accuracy than existing text-to-material models, such as Text2Mat, while also providing the benefits of editable and relightable SVBRDF maps.

A Hierarchical Architecture for Neural Materials

Bowen Xue, Shuang Zhao, Henrik Wann Jensen, Zahra Montazeri

Computer Graphics Forum, e15116, May 2024

2023

Warped-Area Reparameterization of Differential Path Integrals

Peiyu Xu, Sai Bangaru, Tzu-Mao Li, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2023), 42(6), December 2023

Best paper award

Physics-based differentiable rendering is becoming increasingly crucial for tasks in inverse rendering and machine learning pipelines. To address discontinuities caused by geometric boundaries and occlusion, two classes of methods have been proposed: 1) the edge-sampling methods that directly sample light paths at the scene discontinuity boundaries, which require nontrivial data structures and precomputation to select the edges, and 2) the reparameterization methods that avoid discontinuity sampling but are currently limited to hemispherical integrals and unidirectional path tracing.

We introduce a new mathematical formulation that enjoys the benefits of both classes of methods. Unlike previous reparameterization work that focused on hemispherical integrals, we derive the reparameterization in the path space. As a result, to estimate derivatives using our formulation, we can apply advanced Monte Carlo rendering methods, such as bidirectional path tracing, while avoiding explicit sampling of discontinuity boundaries. We show differentiable rendering and inverse rendering results to demonstrate the effectiveness of our method.

Amortizing Samples in Physics-Based Inverse Rendering using ReSTIR

Yu-Chen Wang, Chris Wyman, Lifan Wu, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2023), 42(6), December 2023

Recently, great progress has been made in physics-based differentiable rendering.

Existing differentiable rendering techniques typically focus on static scenes, but during inverse rendering---a key application for differentiable rendering---the scene is updated dynamically by each gradient step.

In this paper, we take a first step to leverage temporal data in the context of inverse direct illumination.

By adopting reservoir-based spatiotemporal importance resampling (ReSTIR), we introduce new Monte Carlo estimators for both interior and boundary components of differential direct illumination integrals.

We also integrate ReSTIR with antithetic sampling to further improve its effectiveness.

At equal frame time, our methods produce gradient estimates with up to 100X lower relative error than baseline methods.

Additionally, we propose an inverse-rendering pipeline that incorporates these estimators and provides reconstructions with up to 20X lower error.
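The reservoir-based resampling that ReSTIR builds on can be sketched as follows. This is only the generic streaming building block (weighted reservoir sampling combined with resampled importance sampling) under illustrative assumptions; it is not the differential estimators introduced in the paper, and the names `Reservoir`, `resample`, `target`, and `source_pdf` are hypothetical.

```python
import random

class Reservoir:
    """Keeps one sample from a stream, chosen proportionally to its weight."""

    def __init__(self):
        self.sample = None
        self.weight_sum = 0.0
        self.count = 0

    def update(self, candidate, weight, rng):
        self.weight_sum += weight
        self.count += 1
        # Replace the stored sample with probability weight / weight_sum.
        if weight > 0.0 and rng.random() * self.weight_sum < weight:
            self.sample = candidate

def resample(candidates, target, source_pdf, rng):
    """Pick one candidate roughly proportional to `target`.

    Returns the chosen sample y and its contribution weight W, so that
    f(y) * W estimates the integral of f.
    """
    reservoir = Reservoir()
    for x in candidates:
        reservoir.update(x, target(x) / source_pdf(x), rng)
    if reservoir.sample is None:
        return None, 0.0
    weight = reservoir.weight_sum / (reservoir.count * target(reservoir.sample))
    return reservoir.sample, weight
```

Because a reservoir stores only one sample and two running totals, reservoirs from neighboring pixels or earlier iterations can be merged cheaply, which is what makes sample reuse across space and time practical.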

PSDR-Room: Single Photo to Scene using Differentiable Rendering

Kai Yan, Fujun Luan, Miloš Hašan, Thibault Groueix, Valentin Deschaintre, Shuang Zhao

ACM SIGGRAPH Asia 2023 (conference-track full paper), December 2023

A 3D digital scene contains many components: lights, materials and geometries, interacting to reach the desired appearance. Staging such a scene is time-consuming and requires both artistic and technical skills. In this work, we propose PSDR-Room, a system that optimizes lighting as well as the pose and materials of individual objects to match a target image of a room scene, with minimal user input. To this end, we leverage a recent path-space differentiable rendering approach that provides unbiased gradients of the rendering with respect to geometry, lighting, and procedural materials, allowing us to optimize all of these components using gradient descent to visually match the input photo appearance. We use recent single-image scene understanding methods to initialize the optimization and search for appropriate 3D models and materials. We evaluate our method on real photographs of indoor scenes and demonstrate the editability of the resulting scene components.

Neural-PBIR Reconstruction of Shape, Material, and Illumination

Cheng Sun, Guangyan Cai, Zhengqin Li, Kai Yan, Cheng Zhang, Carl Marshall, Jia-Bin Huang, Shuang Zhao, Zhao Dong

International Conference on Computer Vision (ICCV), October 2023

Reconstructing the shape and spatially varying surface appearances of a physical-world object as well as its surrounding illumination based on 2D images (e.g., photographs) of the object has been a long-standing problem in computer vision and graphics. In this paper, we introduce a robust object reconstruction pipeline combining neural-based object reconstruction and physics-based inverse rendering (PBIR). Specifically, our pipeline first leverages a neural stage to produce high-quality but potentially imperfect predictions of object shape, reflectance, and illumination. Then, in the later stage, initialized by the neural predictions, we perform PBIR to refine the initial results and obtain the final high-quality reconstruction. Experimental results demonstrate that our pipeline significantly outperforms existing reconstruction methods in both quality and performance.

SAM-RL: Sensing-Aware Model-Based Reinforcement Learning via Differentiable Physics-Based Simulation and Rendering

Jun Lv, Yunhai Feng, Cheng Zhang, Shuang Zhao, Lin Shao, Cewu Lu

Robotics: Science and Systems (RSS), July 2023

Best system paper finalist

Efficient Path-Space Differentiable Volume Rendering With Respect To Shapes

Zihan Yu, Cheng Zhang, Olivier Maury, Christophe Hery, Zhao Dong, Shuang Zhao

Computer Graphics Forum (Eurographics Symposium on Rendering), 42(4), July 2023

Differentiable rendering of translucent objects with respect to their shapes has been a long-standing problem. State-of-the-art methods require detecting object silhouettes or specifying change rates inside translucent objects, both of which can be expensive for translucent objects with complex shapes.

In this paper, we address this problem for translucent objects with no refractive or reflective boundaries. By reparameterizing interior components of differential path integrals, our new formulation does not require change rates to be specified in the interior of objects. Further, we introduce new Monte Carlo estimators based on this formulation that do not require explicit detection of object silhouettes.

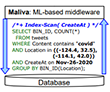

Maliva: Using Machine Learning to Rewrite Visualization Queries Under Time Constraints

Qiushi Bai, Sadeem Alsudais, Chen Li, Shuang Zhao

International Conference on Extending Database Technology (EDBT), March 2023

We consider data-visualization systems where a middleware layer translates a frontend request to a SQL query to a backend database to compute visual results. We study the problem of answering a visualization request within a limited time due to the responsiveness requirement. We explore optimization options of rewriting an original query by adding hints and/or doing approximations so that the total time is within the time constraint. We develop a novel middleware solution called Maliva based on machine learning (ML) techniques. It applies the Markov Decision Process (MDP) model to decide how to rewrite queries and uses instances to train an agent to make a sequence of decisions judiciously for an online request. We give a full specification of the technique, including how to construct an MDP model, how to train an agent, and how to use approximation rewriting options. Our experiments on both real and synthetic datasets show that Maliva performs significantly better than a baseline solution that does not do any rewriting, in terms of both the probability of serving requests interactively and query execution time.

2022

Efficient Differentiation of Pixel Reconstruction Filters for Path-Space Differentiable Rendering

Zihan Yu, Cheng Zhang, Derek Nowrouzezahrai, Zhao Dong, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2022), 41(6), December 2022

Pixel reconstruction filters play an important role in physics-based rendering and have been thoroughly studied. In physics-based differentiable rendering, however, the proper treatment of pixel filters remains largely under-explored. We present a new technique to efficiently differentiate pixel reconstruction filters based on the path-space formulation. Specifically, we formulate the pixel boundary integral that models discontinuities in pixel filters and introduce new antithetic sampling methods that support differentiable path sampling methods, such as adjoint particle tracing and bidirectional path tracing. We demonstrate both the need and efficacy of antithetic sampling when estimating this integral, and we evaluate its effectiveness across several differentiable- and inverse-rendering settings.

GSViz: Progressive Visualization of Geospatial Influences in Social Networks

Sadeem Alsudais, Qiushi Bai, Shuang Zhao, Chen Li

ACM SIGSPATIAL 2022, November 2022

With the growing popularity of social networks, it becomes increasingly important to analyze binary relationships between entities such as users or online posts. These relationships are particularly useful when the entities are location-based. The spatial dimension provides more insights on influences in social networks across different regions. In this paper, we study how to visualize geospatial relationships on a large social network for queries with ad hoc conditions (such as keyword search) that retrieve a subnetwork. We focus on a main efficiency challenge to support responsive visualization, and present a middleware-based system called GSViz that progressively answers requests and computes results incrementally. GSViz minimizes visual clutter by clustering the spatial points while considering the edges among them. It further minimizes the clutter by incrementally bundling the edges, i.e., grouping similar edges in a bundle to increase the screen's white space. The system allows user interactions such as zooming and panning. We conducted an extensive computational study on real data sets and a user study, which evaluated the system's performance and the quality of its visualization results.

Efficient Estimation of Boundary Integrals for Path-Space Differentiable Rendering

Kai Yan, Christoph Lassner, Brian Budge, Zhao Dong, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH 2022), 41(4), July 2022

Boundary integrals are unique to physics-based differentiable rendering and crucial for differentiating with respect to object geometry. Under the differential path integral framework, which has enabled the development of sophisticated differentiable rendering algorithms, the boundary components are themselves path integrals. Previously, although the mathematical formulation of boundary path integrals has been established, efficient estimation of these integrals has remained challenging.

In this paper, we introduce a new technique to efficiently estimate boundary path integrals. A key component of our technique is a primary-sample-space guiding step for importance sampling of boundary segments. Additionally, we show multiple importance sampling can be used to combine multiple guided samplings. Lastly, we introduce an optional edge sorting step to further improve the runtime performance.

We evaluate the effectiveness of our method using several differentiable-rendering and inverse-rendering examples and provide comparisons with existing methods for reconstruction as well as gradient quality.

Physics-Based Inverse Rendering using Combined Implicit and Explicit Geometries

Guangyan Cai, Kai Yan, Zhao Dong, Ioannis Gkioulekas, Shuang Zhao

Computer Graphics Forum (Eurographics Symposium on Rendering), 41(4), July 2022

Mathematically representing the shape of an object is a key ingredient for solving inverse rendering problems. Explicit representations like meshes are efficient to render in a differentiable fashion but have difficulties handling topology changes. Implicit representations like signed-distance functions, on the other hand, offer better support of topology changes but are much more difficult to use for physics-based differentiable rendering. We introduce a new physics-based inverse rendering pipeline that uses both implicit and explicit representations. Our technique enjoys the benefit of both representations by supporting both topology changes and differentiable rendering of complex effects such as environmental illumination, soft shadows, and interreflection. We demonstrate the effectiveness of our technique using several synthetic and real examples.

Rainbow: A Rendering-Aware Index for High-Quality Spatial Scatterplots with Result-Size Budgets

Qiushi Bai, Sadeem Alsudais, Chen Li, Shuang Zhao

Eurographics Symposium on Parallel Graphics and Visualization, June 2022

We study the problem of computing a spatial scatterplot on a large dataset for arbitrary zooming/panning queries. We introduce a general framework called “Rainbow” that generates a high-quality scatterplot for a given result-size budget. Rainbow augments a spatial index with judiciously selected representative points offline. To answer a query, Rainbow traverses the index top-down and selects representative points of good quality until the result-size budget is reached. We experimentally demonstrate the effectiveness of Rainbow.

A Compact Representation of Measured BRDFs Using Neural Processes

Chuankun Zheng, Ruzhang Zheng, Rui Wang, Shuang Zhao, Hujun Bao

ACM Transactions on Graphics (Presented at SIGGRAPH 2022), 41(2), April 2022

In this article, we introduce a compact representation for measured BRDFs by leveraging Neural Processes (NPs). Unlike prior methods that express those BRDFs as discrete high-dimensional matrices or tensors, our technique considers measured BRDFs as continuous functions and works in corresponding function spaces. Specifically, provided the evaluations of a set of BRDFs, such as those in the MERL and EPFL datasets, our method learns a low-dimensional latent space as well as a few neural networks to encode and decode these measured BRDFs or new BRDFs into and from this space in a non-linear fashion. Leveraging this latent space and the flexibility offered by the NPs formulation, our encoded BRDFs are highly compact and offer a level of accuracy better than prior methods. We demonstrate the practical usefulness of our approach via two important applications, BRDF compression and editing. Additionally, we design two alternative post-trained decoders to, respectively, achieve better compression ratio for individual BRDFs and enable importance sampling of BRDFs.

2021

Beyond Mie Theory: Systematic Computation of Bulk Scattering Parameters based on Microphysical Wave Optics

Yu Guo, Adrian Jarabo, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2021), 40(6), December 2021

Light scattering in participating media and translucent materials is typically modeled using the radiative transfer theory. Under the assumption of independent scattering between particles, it utilizes several bulk scattering parameters to statistically characterize light-matter interactions at the macroscale. To calculate these parameters based on microscale material properties, the Lorenz-Mie theory has been considered the gold standard.

In this paper, we present a generalized framework capable of systematically and rigorously computing bulk scattering parameters beyond the far-field assumption of Lorenz-Mie theory. Our technique accounts for microscale wave-optics effects such as diffraction and interference as well as interactions between nearby particles.

Our framework is general, can be plugged into any renderer supporting Lorenz-Mie scattering, and allows arbitrary packing rates and particle correlations; we demonstrate this generality by computing bulk scattering parameters for a wide range of materials, including anisotropic and correlated media.

Differentiable Time-Gated Rendering

Lifan Wu*, Guangyan Cai*, Ravi Ramamoorthi, Shuang Zhao

(* equal contribution)

ACM Transactions on Graphics (SIGGRAPH Asia 2021), 40(6), December 2021

The continued advancements of time-of-flight imaging devices have enabled new imaging pipelines with numerous applications. Consequently, several forward rendering techniques capable of accurately and efficiently simulating these devices have been introduced. However, general-purpose differentiable rendering techniques that estimate derivatives of time-of-flight images are still lacking. In this paper, we introduce a new theory of differentiable time-gated rendering that enjoys the generality of differentiating with respect to arbitrary scene parameters. Our theory also allows the design of advanced Monte Carlo estimators capable of handling cameras with near-delta or discontinuous time gates.

We validate our theory by comparing derivatives generated with our technique and finite differences. Further, we demonstrate the usefulness of our technique using a few proof-of-concept inverse-rendering examples that simulate several time-of-flight imaging scenarios.

Antithetic Sampling for Monte Carlo Differentiable Rendering

Cheng Zhang, Zhao Dong, Michael Doggett, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH 2021), 40(4), August 2021

Stochastic sampling of light transport paths is key to Monte Carlo forward rendering, and previous studies have led to mature techniques capable of drawing high-contribution light paths in complex scenes. These sampling techniques have also been applied to differentiable rendering.

In this paper, we demonstrate that path sampling techniques developed for forward rendering can become inefficient for differentiable rendering of glossy materials---especially when estimating derivatives with respect to global scene geometries. To address this problem, we introduce antithetic sampling of BSDFs and light-transport paths, which allows significantly faster convergence and can be easily integrated into existing differentiable rendering pipelines. We validate our method by comparing our derivative estimates to those generated with existing unbiased techniques. Further, we demonstrate the effectiveness of our technique by providing equal-quality and equal-time comparisons with existing sampling methods.
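As a rough illustration of the general idea only, the sketch below applies textbook antithetic variates to a one-dimensional integral. It is not the paper's estimator for BSDFs or light paths; the integrand and the function names `plain_estimate` and `antithetic_estimate` are assumptions made for this example.

```python
import math
import random
import statistics


def integrand(u: float) -> float:
    """Monotone test integrand on [0, 1]; its exact integral is e - 1."""
    return math.exp(u)


def plain_estimate(n_samples: int, seed: int = 0):
    """Ordinary Monte Carlo: average independent evaluations of the integrand."""
    rng = random.Random(seed)
    values = [integrand(rng.random()) for _ in range(n_samples)]
    return statistics.fmean(values), statistics.variance(values)


def antithetic_estimate(n_pairs: int, seed: int = 0):
    """Antithetic variates: pair each sample u with its mirror image 1 - u.

    For a monotone integrand the two evaluations are negatively correlated,
    so the average of each pair has much lower variance than a single sample.
    """
    rng = random.Random(seed)
    values = []
    for _ in range(n_pairs):
        u = rng.random()
        values.append(0.5 * (integrand(u) + integrand(1.0 - u)))
    return statistics.fmean(values), statistics.variance(values)
```

Each antithetic pair costs two integrand evaluations, so a fair comparison sets the per-pair variance against half the per-sample variance of the plain estimator.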

Path-Space Differentiable Rendering of Participating Media

Cheng Zhang*, Zihan Yu*, Shuang Zhao

(* equal contribution)

ACM Transactions on Graphics (SIGGRAPH 2021), 40(4), August 2021

Physics-based differentiable rendering---which focuses on estimating derivatives of radiometric detector responses with respect to arbitrary scene parameters---has a diverse array of applications from solving analysis-by-synthesis problems to training machine-learning pipelines incorporating forward-rendering processes. Unfortunately, existing general-purpose differentiable rendering techniques lack either the generality to handle volumetric light transport or the flexibility to devise Monte Carlo estimators capable of handling complex geometries and light transport effects.

In this paper, we bridge this gap by showing how generalized path integrals can be differentiated with respect to arbitrary scene parameters. Specifically, we establish the mathematical formulation of generalized differential path integrals that capture both interfacial and volumetric light transport. Our formulation allows the development of advanced differentiable rendering algorithms capable of efficiently handling challenging geometric discontinuities and light transport phenomena such as volumetric caustics.

We validate our method by comparing our derivative estimates to those generated using finite differences. Further, to demonstrate the effectiveness of our technique, we compare both differentiable rendering and inverse rendering performance with state-of-the-art methods.

Unified Shape and SVBRDF Recovery using Differentiable Monte Carlo Rendering

Fujun Luan, Shuang Zhao, Kavita Bala, Zhao Dong

Computer Graphics Forum (Eurographics Symposium on Rendering), 40(4), July 2021

Reconstructing the shape and appearance of real-world objects using measured 2D images has been a long-standing inverse rendering problem. In this paper, we introduce a new analysis-by-synthesis technique capable of producing high-quality reconstructions through robust coarse-to-fine optimization and physics-based differentiable rendering.

Unlike most previous methods that handle geometry and reflectance largely separately, our method unifies the optimization of both by leveraging image gradients with respect to both object reflectance and geometry. To obtain physically accurate gradient estimates, we develop a new GPU-based Monte Carlo differentiable renderer leveraging recent advances in differentiable rendering theory to offer unbiased gradients while enjoying better performance than existing tools like PyTorch3D and redner. To further improve robustness, we utilize several shape and material priors as well as a coarse-to-fine optimization strategy to reconstruct geometry. Using both synthetic and real input images, we demonstrate that our technique can produce reconstructions with higher quality than previous methods.

Practical Ply-Based Appearance Modeling for Knitted Fabrics

Zahra Montazeri, Søren B. Gammelmark, Henrik Wann Jensen, Shuang Zhao

Eurographics Symposium on Rendering, July 2021

Modeling the geometry and the appearance of knitted fabrics has been challenging due to their complex geometries and interactions with light. Previous surface-based models have difficulties capturing fine-grained knit geometries; micro-appearance models, on the other hand, typically store individual cloth fibers explicitly and are expensive to generate and render. Further, neither of the models offers the flexibility to accurately capture both the reflection and the transmission of light simultaneously.

In this paper, we introduce an efficient technique to generate knit models with user-specified knitting patterns. Our model stores individual knit plies with fiber-level details depicted using normal and tangent mapping. We evaluate our generated models using a wide array of knitting patterns. Further, we qualitatively compare renderings of our models to photos of real samples.

Hybrid Monte Carlo Estimators for Multilayer Transport Problems

Shuang Zhao and Jerome Spanier

Journal of Computational Physics (JCP), 431, April 2021

This paper introduces a new family of hybrid estimators aimed at controlling the efficiency of Monte Carlo computations in particle transport problems. In this context, efficiency is usually measured by the figure of merit (FOM) given by the inverse product of the estimator variance \( \mathrm{Var}[\xi] \) and the run time \( T \): \( \mathrm{FOM} := (\mathrm{Var}[\xi] \cdot T)^{-1} \). Previously, we developed a new family of transport-constrained unbiased radiance estimators (T-CURE) that generalize the conventional collision and track length estimators and provide 1--2 orders of magnitude additional variance reduction. However, these gains in variance reduction are partly offset by increases in overhead time, lowering their computational efficiency. Here we show that combining T-CURE estimation with conventional terminal estimation within each individual biography can moderate the efficiency of the resulting "hybrid" estimator without introducing bias in the computation. This is achieved by treating only the refractive interface crossings with the extended next event estimator, and all others by standard terminal estimators. This is because when there are index-mismatched interfaces between the collision location and the detector, the T-CURE computation rapidly becomes intractable due to the large number of refractions and reflections that can arise. We illustrate the gains in efficiency by comparing our hybrid strategy with more conventional estimation methods in a series of multi-layer numerical examples.
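To make the trade-off between variance and run time concrete, here is a minimal sketch of the figure of merit defined above. The helper name `figure_of_merit` and all numbers are hypothetical and are not taken from the paper.

```python
def figure_of_merit(variance: float, run_time: float) -> float:
    """Return FOM = 1 / (Var[xi] * T); a larger value means higher efficiency."""
    if variance <= 0.0 or run_time <= 0.0:
        raise ValueError("variance and run_time must be positive")
    return 1.0 / (variance * run_time)


# Hypothetical estimator A: higher variance, but cheap to run.
fom_a = figure_of_merit(variance=4.0e-4, run_time=10.0)

# Hypothetical estimator B: 4x lower variance, but 5x the run time.
fom_b = figure_of_merit(variance=1.0e-4, run_time=50.0)
```

With these made-up numbers, estimator B reduces variance fourfold yet ends up with the lower FOM, which is the kind of offset between variance reduction and overhead time that the abstract describes.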

2020

A Bayesian Inference Framework for Procedural Material Parameter Estimation

Yu Guo, Miloš Hašan, Lingqi Yan, Shuang Zhao

Computer Graphics Forum (Pacific Graphics), 39(7), December 2020

Procedural material models have been gaining traction in many applications thanks to their flexibility, compactness, and easy editability. In this paper, we explore the inverse rendering problem of procedural material parameter estimation from photographs using a Bayesian framework. We use summary functions for comparing unregistered images of a material under known lighting, and we explore both hand-designed and neural summary functions. In addition to estimating the parameters by optimization, we introduce a Bayesian inference approach using Hamiltonian Monte Carlo to sample the space of plausible material parameters, providing additional insight into the structure of the solution space. To demonstrate the effectiveness of our techniques, we fit procedural models of a range of materials---wall plaster, leather, wood, anisotropic brushed metals and metallic paints---to both synthetic and real target images.

MaterialGAN: Reflectance Capture using a Generative SVBRDF Model

Yu Guo, Cameron Smith, Miloš Hašan, Kalyan Sunkavalli, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2020), 39(6), November 2020

We address the problem of reconstructing spatially-varying BRDFs from a small set of image measurements. This is a fundamentally under-constrained problem, and previous work has relied on using various regularization priors or on capturing many images to produce plausible results. In this work, we present MaterialGAN, a deep generative convolutional network based on StyleGAN2, trained to synthesize realistic SVBRDF parameter maps. We show that MaterialGAN can be used as a powerful material prior in an inverse rendering framework: we optimize in its latent representation to generate material maps that match the appearance of the captured images when rendered. We demonstrate this framework on the task of reconstructing SVBRDFs from images captured under flash illumination using a hand-held mobile phone. Our method succeeds in producing plausible material maps that accurately reproduce the target images, and outperforms previous state-of-the-art material capture methods in evaluations on both synthetic and real data. Furthermore, our GAN-based latent space allows for high-level semantic material editing operations such as generating material variations and material morphing.

A Practical Ply-Based Appearance Model of Woven Fabrics

Zahra Montazeri, Søren B. Gammelmark, Shuang Zhao, Henrik Wann Jensen

ACM Transactions on Graphics (SIGGRAPH Asia 2020), 39(6), November 2020

Simulating the appearance of woven fabrics is challenging due to the complex interplay of lighting between the constituent yarns and fibers. Conventional surface-based models lack the fidelity and details for producing realistic close-up renderings. Micro-appearance models, on the other hand, can produce highly detailed renderings by depicting fabrics fiber-by-fiber, but become expensive when handling large pieces of clothing. Further, neither surface-based nor micro-appearance models have been shown in practice to match measurements of complex anisotropic reflection and transmission simultaneously.

In this paper, we introduce a practical appearance model for woven fabrics. We model the structure of a fabric at the ply level and simulate the local appearance of fibers making up each ply. Our model accounts for both reflection and transmission of light and is capable of matching physical measurements better than prior methods, including fiber-based techniques. Compared to existing micro-appearance models, our model is light-weight and scales to large pieces of clothing.

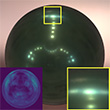

Inverse-Rendering-Based Analysis of the Fine Illumination Effects in Salvator Mundi

Marco (Zhanhang) Liang, Shuang Zhao, Michael T. Goodrich

Leonardo (SIGGRAPH 2020 Art Paper), 53(4), August 2020

The painting Salvator Mundi is attributed to Leonardo da Vinci and depicts Jesus holding a transparent orb. The authors study the optical accuracy of the fine illumination effects in this painting using inverse rendering. Their experimental results provide plausible explanations for the strange glow inside the orb, the anomalies on the orb and the mysterious three white spots, supporting the optical accuracy of the orb’s rendering down to its fine-grain details.

Path-Space Differentiable Rendering

Cheng Zhang, Bailey Miller, Kai Yan, Ioannis Gkioulekas, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH 2020), 39(4), July 2020

Physics-based differentiable rendering, the estimation of derivatives of radiometric measures with respect to arbitrary scene parameters, has a diverse array of applications from solving analysis-by-synthesis problems to training machine learning pipelines incorporating forward rendering processes. Unfortunately, general-purpose differentiable rendering remains challenging due to the lack of efficient estimators as well as the need to identify and handle complex discontinuities such as visibility boundaries. In this paper, we show how path integrals can be differentiated with respect to arbitrary differentiable changes of a scene. We provide a detailed theoretical analysis of this process and establish new differentiable rendering formulations based on the resulting differential path integrals. Our path-space differentiable rendering formulation allows the design of new Monte Carlo estimators that offer significantly better efficiency than state-of-the-art methods in handling complex geometric discontinuities and light transport phenomena such as caustics.

We validate our method by comparing our derivative estimates to those generated using the finite-difference method. To demonstrate the effectiveness of our technique, we compare inverse-rendering performance with a few state-of-the-art differentiable rendering methods.

Langevin Monte Carlo Rendering with Gradient-Based Adaptation

Fujun Luan, Shuang Zhao, Kavita Bala, Ioannis Gkioulekas

ACM Transactions on Graphics (SIGGRAPH 2020), 39(4), July 2020

We introduce a suite of Langevin Monte Carlo algorithms for efficient photorealistic rendering of scenes with complex light transport effects, such as caustics, interreflections, and occlusions. Our algorithms operate in primary sample space, and use the Metropolis-adjusted Langevin algorithm (MALA) to generate new samples. Drawing inspiration from state-of-the-art stochastic gradient descent procedures, we combine MALA with adaptive preconditioning and momentum schemes that re-use previously-computed first-order gradients, either in an online or in a cache-driven fashion. This combination allows MALA to adapt to the local geometry of the primary sample space, without the computational overhead associated with previous Hessian-based adaptation algorithms. We use the theory of controlled Markov chain Monte Carlo to ensure that these combinations remain ergodic, and are therefore suitable for unbiased Monte Carlo rendering. Through extensive experiments, we show that our algorithms, MALA with online and cache-driven adaptation, can successfully handle complex light transport in a large variety of scenes, leading to improved performance (on average more than 3X variance reduction at equal time, and 7X for motion blur) compared to state-of-the-art Markov chain Monte Carlo rendering algorithms.
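For readers unfamiliar with MALA, the following is a minimal sketch of the basic, unpreconditioned algorithm on a one-dimensional standard normal target. It omits the adaptive preconditioning and momentum schemes described above and has nothing rendering-specific in it; the target, step size, and function names are assumptions chosen for illustration.

```python
import math
import random


def log_target(x: float) -> float:
    """Unnormalized log-density of a standard normal (illustrative target)."""
    return -0.5 * x * x


def grad_log_target(x: float) -> float:
    """Gradient of log_target."""
    return -x


def mala(n_steps: int, step: float, x0: float = 0.0, seed: int = 0):
    """Run basic MALA and return the list of visited states."""
    rng = random.Random(seed)

    def log_q(to: float, frm: float) -> float:
        # Log-density (up to a constant) of the Langevin proposal frm -> to.
        mean = frm + 0.5 * step * step * grad_log_target(frm)
        return -((to - mean) ** 2) / (2.0 * step * step)

    x = x0
    samples = []
    for _ in range(n_steps):
        # Langevin proposal: gradient drift plus Gaussian noise.
        proposal = (x + 0.5 * step * step * grad_log_target(x)
                    + step * rng.gauss(0.0, 1.0))
        # Metropolis-Hastings correction for the asymmetric proposal.
        log_alpha = (log_target(proposal) + log_q(x, proposal)
                     - log_target(x) - log_q(proposal, x))
        if rng.random() < math.exp(min(0.0, log_alpha)):
            x = proposal
        samples.append(x)
    return samples
```

The gradient drift pushes proposals toward high-density regions, while the accept/reject step keeps the chain's stationary distribution equal to the target.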

Analytic Spherical Harmonic Gradients for Real-Time Rendering with Many Polygonal Area Lights

Lifan Wu, Guangyan Cai, Shuang Zhao, Ravi Ramamoorthi

ACM Transactions on Graphics (SIGGRAPH 2020), 39(4), July 2020

Recent work has developed analytic formulae for spherical harmonic (SH) coefficients from uniform polygonal lights, enabling near-field area lights to be included in Precomputed Radiance Transfer (PRT) systems, and in offline rendering. However, the method is inefficient since coefficients need to be recomputed at each vertex or shading point, for each light, even though the SH coefficients vary smoothly in space. The complexity scales linearly with the number of lights, making many-light rendering difficult. In this paper, we develop a novel analytic formula for the spatial gradients of the spherical harmonic coefficients for uniform polygonal area lights. The result is a significant generalization, involving the Reynolds transport theorem to reduce the problem to a boundary integral for which we derive a new analytic formula, showing how to reduce a key term to an earlier recurrence for SH coefficients. The implementation requires only minor additions to existing code for SH coefficients. The results also hold implications for recent efforts on differentiable rendering. We show that SH gradients enable very sparse spatial sampling, followed by accurate Hermite interpolation. This enables scaling PRT to hundreds of area lights with minimal overhead and real-time frame rates. Moreover, the SH gradient formula is a new mathematical result that potentially enables many other graphics applications.

Effect of Geometric Sharpness on Translucent Material Perception

Bei Xiao, Shuang Zhao, Ioannis Gkioulekas, Wenyan Bi, Kavita Bala

Journal of Vision, 20(7), July 2020

When judging optical properties of a translucent object, humans often look at sharp geometric features such as edges and thin parts. Analysis of the physics of light transport shows that these sharp geometries are necessary for scientific imaging systems to be able to accurately measure the underlying material optical properties. In this paper, we examine whether human perception of translucency is likewise affected by the presence of sharp geometry, by confounding our perceptual inferences about an object’s optical properties. We employ physically accurate simulations to create visual stimuli of translucent materials with varying shapes and optical properties under different illuminations. We then use these stimuli in psychophysical experiments, where human observers are asked to match an image of a target object by adjusting the material parameters of a match object with different geometric sharpness, lighting, and 3D geometry. We find that the level of geometric sharpness significantly affects perceived translucency by observers. These findings generalize across a few illumination conditions and object shapes. Our results suggest that the perceived translucency of an object depends on both the underlying material’s optical parameters and 3D shape of the object. We also find that models based on image contrast cannot fully predict the perceptual results.

Multi-Scale Appearance Modeling of Granular Materials with Continuously Varying Grain Properties

Cheng Zhang and Shuang Zhao

Eurographics Symposium on Rendering (EGSR), June 2020

Many real-world materials such as sand, snow, salt, and rice are comprised of large collections of grains. Previous multiscale rendering methods for granular materials require precomputing light transport per grain and have difficulty handling materials with continuously varying grain properties. Further, existing methods usually describe granular materials by explicitly storing individual grains, which becomes hugely data-intensive to describe large objects, or replicating small blocks of grains, which lacks the flexibility to describe materials with grains distributed in nonuniform manners.

We introduce a new method to render granular materials with continuously varying grain optical properties efficiently. This is achieved using a novel symbolic and differentiable simulation of light transport during precomputation. Additionally, we introduce a new representation to depict large-scale granular materials with complex grain distributions. After constructing a template tile as preprocessing, we adapt it at render time to generate large quantities of grains with user-specified distributions. We demonstrate the effectiveness of our techniques using a few examples with a variety of grain properties and distributions.

Towards Learning-Based Inverse Subsurface Scattering

Chengqian Che, Fujun Luan, Shuang Zhao, Kavita Bala, Ioannis Gkioulekas

IEEE International Conference on Computational Photography (ICCP), April 2020

Given images of translucent objects, of unknown shape and lighting, we aim to use learning to infer the optical parameters controlling subsurface scattering of light inside the objects. We introduce a new architecture, the inverse transport network (ITN), that aims to improve generalization of an encoder network to unseen scenes, by connecting it with a physically-accurate, differentiable Monte Carlo renderer capable of estimating image derivatives with respect to scattering material parameters. During training, this combination forces the encoder network to predict parameters that not only match groundtruth values, but also reproduce input images. During testing, the encoder network is used alone, without the renderer, to predict material parameters from a single input image. Drawing insights from the physics of radiative transfer, we additionally use material parameterizations that help reduce estimation errors due to ambiguities in the scattering parameter space. Finally, we augment the training loss with pixelwise weight maps that emphasize the parts of the image most informative about the underlying scattering parameters. We demonstrate that this combination allows neural networks to generalize to scenes with completely unseen geometries and illuminations better than traditional networks, with 38.06% reduced parameter error on average.

2019

On the Optical Accuracy of the Salvator Mundi

Marco (Zhanhang) Liang, Michael T. Goodrich, Shuang Zhao

Technical report (arXiv:1912.03416), December 2019

A debate in the scientific literature has arisen regarding whether the orb depicted in Salvator Mundi, which has been attributed by some experts to Leonardo da Vinci, was rendered in an optically faithful manner or not. Some hypothesize that it was solid crystal while others hypothesize that it was hollow, with competing explanations for its apparent lack of background distortion and its three white spots. In this paper, we study the optical accuracy of the Salvator Mundi using physically based rendering, a sophisticated computer graphics tool that produces optically accurate images by simulating light transport in virtual scenes. We created a virtual model of the composition centered on the translucent orb in the subject's hand. By synthesizing images under configurations that vary illuminations and orb material properties, we tested whether it is optically possible to produce an image that renders the orb similarly to how it appears in the painting. Our experiments show that an optically accurate rendering qualitatively matching that of the painting is indeed possible using materials, light sources, and scientific knowledge available to Leonardo da Vinci circa 1500. We additionally tested alternative theories regarding the composition of the orb, such as that it was a solid calcite ball; these tests provide empirical evidence that such alternatives are unlikely to produce images similar to the painting, and that the orb is instead hollow.

A Differential Theory of Radiative Transfer

Cheng Zhang, Lifan Wu, Changxi Zheng, Ioannis Gkioulekas, Ravi Ramamoorthi, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2019), 38(6), November 2019

Physics-based differentiable rendering is the task of estimating the derivatives of radiometric measures with respect to scene parameters. The ability to compute these derivatives is necessary for enabling gradient-based optimization in a diverse array of applications: from solving analysis-by-synthesis problems to training machine learning pipelines incorporating forward rendering processes. Unfortunately, physics-based differentiable rendering remains challenging, due to the complex and typically nonlinear relation between pixel intensities and scene parameters.

We introduce a differential theory of radiative transfer, which shows how individual components of the radiative transfer equation (RTE) can be differentiated with respect to arbitrary differentiable changes of a scene. Our theory encompasses the same generality as the standard RTE, allowing differentiation while accurately handling a large range of light transport phenomena such as volumetric absorption and scattering, anisotropic phase functions, and heterogeneity. To numerically estimate the derivatives given by our theory, we introduce an unbiased Monte Carlo estimator supporting arbitrary surface and volumetric configurations. Our technique differentiates path contributions symbolically and uses additional boundary integrals to capture geometric discontinuities such as visibility changes.

We validate our method by comparing our derivative estimations to those generated using the finite-difference method. Furthermore, we use a few synthetic examples inspired by real-world applications in inverse rendering, non-line-of-sight (NLOS) and biomedical imaging, and design, to demonstrate the practical usefulness of our technique.

Multi-Scale Hybrid Micro-Appearance Modeling and Realtime Rendering of Thin Fabrics

Chao Xu, Rui Wang, Shuang Zhao, Hujun Bao

IEEE Transactions on Visualization and Computer Graphics, 27(4), April 2021

(Date of Publication: October 30, 2019)

Micro-appearance models offer state-of-the-art quality for cloth renderings. Unfortunately, they usually rely on 3D volumes or fiber meshes that are not only data-intensive but also expensive to render. Traditional surface-based models, on the other hand, are light-weight and fast to render but normally lack the fidelity and details important for design and prototyping applications. We introduce a multi-scale, hybrid model to bridge this gap for thin fabrics. Our model enjoys both the compactness and speedy rendering offered by traditional surface-based models and the rich details provided by the micro-appearance models. Further, we propose a new algorithm to convert state-of-the-art micro-appearance models into our representation while qualitatively preserving the detailed appearance. We demonstrate the effectiveness of our technique by integrating it into a real-time rendering system.

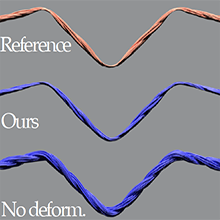

Mechanics-Aware Modeling of Cloth Appearance

Zahra Montazeri, Chang Xiao, Yun (Raymond) Fei, Changxi Zheng, Shuang Zhao

IEEE Transactions on Visualization and Computer Graphics, 27(1), January 2021

(Date of Publication: August 26, 2019)

Micro-appearance models have brought unprecedented fidelity and details to cloth rendering. Yet, these models neglect fabric mechanics: when a piece of cloth interacts with the environment, its yarn and fiber arrangement usually changes in response to external contact and tension forces. Since subtle changes of a fabric's microstructures can greatly affect its macroscopic appearance, mechanics-driven appearance variation of fabrics has been a phenomenon that remains to be captured. We introduce a mechanics-aware model that adapts the microstructures of cloth yarns in a physics-based manner. Our technique works on two distinct physical scales: using physics-based simulations of individual yarns, we capture the rearrangement of yarn-level structures in response to external forces. These yarn structures are further enriched to obtain appearance-driving fiber-level details. The cross-scale enrichment is made practical through a new parameter fitting algorithm for simulation, an augmented procedural yarn model coupled with a custom-designed regression neural network. We train the network using a dataset generated by joint simulations at both the yarn and the fiber levels. Through several examples, we demonstrate that our model is capable of synthesizing photorealistic cloth appearance in a mechanically plausible way.

Accurate Appearance Preserving Prefiltering for Rendering Displacement-Mapped Surfaces

Lifan Wu, Shuang Zhao, Ling-Qi Yan, Ravi Ramamoorthi

ACM Transactions on Graphics (SIGGRAPH 2019), 38(4), July 2019

Prefiltering the reflectance of a displacement-mapped surface while preserving its overall appearance is challenging, as smoothing a displacement map causes complex changes of illumination effects such as shadowing-masking and interreflection. In this paper, we introduce a new method that prefilters displacement maps and BRDFs jointly and constructs SVBRDFs at reduced resolutions. These SVBRDFs preserve the appearance of the input models by capturing both shadowing-masking and interreflection effects. To express our appearance-preserving SVBRDFs efficiently, we leverage a new representation that involves spatially varying NDFs and a novel scaling function that accurately captures micro-scale changes of shadowing, masking, and interreflection effects. Further, we show that the 6D scaling function can be factorized into a 2D function of surface location and a 4D function of direction. By exploiting the smoothness of these functions, we develop a simple and efficient factorization method that does not require computing the full scaling function. The resulting functions can be represented at low resolutions (e.g., 4^2 for the spatial function and 15^4 for the angular function), leading to minimal additional storage. Our method generalizes well to different types of geometries beyond Gaussian surfaces. Models prefiltered using our approach at different scales can be combined to form mipmaps, allowing accurate and anti-aliased level-of-detail (LoD) rendering.

2018

Position-Free Monte Carlo Simulation for Arbitrary Layered BSDFs

Yu Guo, Miloš Hašan, Shuang Zhao

ACM Transactions on Graphics (SIGGRAPH Asia 2018), 37(6), November 2018

Real-world materials are often layered: metallic paints, biological tissues, and many more. Variation in the interface and volumetric scattering properties of the layers leads to a rich diversity of material appearances from anisotropic highlights to complex textures and relief patterns. However, simulating light-layer interactions is a challenging problem. Past analytical or numerical solutions either introduce several approximations and limitations, or rely on expensive operations on discretized BSDFs, preventing the ability to freely vary the layer properties spatially. We introduce a new unbiased layered BSDF model based on Monte Carlo simulation, whose only assumption is the layer assumption itself. Our novel position-free path formulation is fundamentally more powerful at constructing light transport paths than generic light transport algorithms applied to the special case of flat layers, since it is based on a product of solid angle instead of area measures, so does not contain the high-variance geometry terms needed in the standard formulation. We introduce two techniques for sampling the position-free path integral, a forward path tracer with next-event estimation and a full bidirectional estimator. We show a number of examples, featuring multiple layers with surface and volumetric scattering, surface and phase function anisotropy, and spatial variation in all parameters.

Inverse Diffusion Curves using Shape Optimization

Shuang Zhao, Frédo Durand, Changxi Zheng

IEEE Transactions on Visualization and Computer Graphics, 24(7), July 2018

The inverse diffusion curve problem focuses on automatic creation of diffusion curve images that resemble user provided color fields. This problem is challenging since the 1D curves have a nonlinear and global impact on resulting color fields via a partial differential equation (PDE). We introduce a new approach complementary to previous methods by optimizing curve geometry. In particular, we propose a novel iterative algorithm based on the theory of shape derivatives. The resulting diffusion curves are clean and well-shaped, and the final image closely approximates the input. Our method provides a user-controlled parameter to regularize curve complexity, and generalizes to handle input color fields represented in a variety of formats.

Does Geometric Sharpness Affect Perception of Translucent Material?

Bei Xiao, Wenyan Bi, Shuang Zhao, Ioannis Gkioulekas, Kavita Bala

Vision Science Society Annual Meeting, May 2018

When judging material properties of a translucent object, we often look at sharp geometric features such as edges. Image analysis shows edges of translucent objects exhibit distinctive light scattering profiles. Around the edges of translucent objects, there is often a rapid change of material thickness, which provides valuable information for recovering material properties. It was found that perception of 3D shape is different between opaque and translucent objects. Here, we examine whether geometry affects the perception of translucent materials.

The images used in the experiment are computer-generated using the Mitsuba physically based renderer. The shape of each object is described by a 2D height field (in which each pixel contains the amount of extrusion from the object surface to the base plane). We varied both material properties and 3D shapes of the stimuli: for the former, we used materials with varying optical densities (used by the radiative transfer model) so that the object would have different levels of ground-truth translucency; for the latter, we applied different amounts of Gaussian blur to the underlying height fields. Seven observers finished a paired-comparison experiment where they viewed a pair of images that had different ground-truth translucency and blur levels. They were asked to judge which object appeared to be more translucent. We also included control conditions where the objects in both images have the same blur levels.

We found that when there was no difference in the level of blurring between the images, observers could discriminate material properties of the two objects well (mean accuracy = 81%). However, when the two objects differ in the blur level, all observers started to make more mistakes (mean accuracy = 71%). We conclude that observers’ sensitivity to translucent appearance is affected by the sharpness of the 3D geometry of the object, thus suggesting 3D shape affects material perception for translucency.

2017

Fiber-Level On-the-Fly Procedural Textiles

Fujun Luan, Shuang Zhao, Kavita Bala

Computer Graphics Forum (Eurographics Symposium on Rendering), 36(4), July 2017

Procedural textile models are compact, easy to edit, and can achieve state-of-the-art realism with fiber-level details. However, these complex models generally need to be fully instantiated (a.k.a. realized) into 3D volumes or fiber meshes and stored in memory. We introduce a novel realization-minimizing technique that enables physically based rendering of procedural textiles, without the need for full model realizations. The key ingredients of our technique are new data structures and search algorithms that look up regular and flyaway fibers on the fly, efficiently and consistently. Our technique works with compact fiber-level procedural yarn models in their exact form with no approximation imposed. In practice, our method can render very large models that are practically unrenderable using existing methods, while using considerably less memory (60–200X less) and achieving good performance.

Real-Time Linear BRDF MIP-Mapping

Chao Xu, Rui Wang, Shuang Zhao, Hujun Bao

Computer Graphics Forum (Eurographics Symposium on Rendering), 36(4), July 2017

We present a new technique to jointly MIP-map BRDF and normal maps. Starting with generating an instant BRDF map, our technique builds its MIP-mapped versions based on a highly efficient algorithm that interpolates von Mises-Fisher (vMF) distributions. In our BRDF MIP-maps, each pixel stores a vMF mixture approximating the average of all BRDF lobes from the finest level. Our method is capable of jointly MIP-mapping BRDF and normal maps, even with high-frequency variations, in real time while preserving high-quality reflectance details. Further, it is very fast, easy to implement, and requires no precomputation.
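
As a rough illustration of how a coarser MIP level can summarize finer-level normals with a vMF lobe, the sketch below fits a single vMF distribution to a set of unit normals using the mean resultant length. This is a generic textbook fit, not the interpolation algorithm of the paper; the function name `fit_vmf` and the use of the Banerjee et al. concentration approximation are assumptions made for illustration.

```python
import math

def fit_vmf(normals):
    """Fit one vMF lobe (mean direction mu, concentration kappa) to unit normals.

    Hypothetical helper for illustration; not taken from the paper.
    """
    n = len(normals)
    # Average the unit vectors; the mean gets shorter as the normals spread out.
    mean = [sum(v[i] for v in normals) / n for i in range(3)]
    r = math.sqrt(sum(c * c for c in mean))
    if r == 0.0:
        raise ValueError("normals cancel out; mean direction is undefined")
    mu = [c / r for c in mean]
    # Approximate concentration in 3D: kappa ~ r (3 - r^2) / (1 - r^2).
    # Identical normals give r = 1, i.e. an infinitely sharp lobe.
    kappa = math.inf if r >= 1.0 else r * (3.0 - r * r) / (1.0 - r * r)
    return mu, kappa

# Two perpendicular normals produce a broad lobe pointing halfway between them.
mu, kappa = fit_vmf([(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)])
```

A small `kappa` indicates widely varying normals under the filter footprint, while a large `kappa` indicates a nearly flat region.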

2016

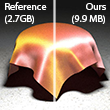

Downsampling Scattering Parameters for Rendering Anisotropic Media

Shuang Zhao*, Lifan Wu*, Frédo Durand, Ravi Ramamoorthi

(* Joint first authors)

ACM Transactions on Graphics (SIGGRAPH Asia 2016), 35(6), November 2016

Volumetric micro-appearance models have provided remarkably high-quality renderings, but are highly data intensive and usually require tens of gigabytes in storage. When an object is viewed from a distance, the highest level of detail offered by these models is usually unnecessary, but traditional linear downsampling weakens the object's intrinsic shadowing structures and can yield poor accuracy. We introduce a joint optimization of single-scattering albedos and phase functions to accurately downsample heterogeneous and anisotropic media. Our method is built upon scaled phase functions, a new representation combining albedos and (standard) phase functions. We also show that modularity can be exploited to greatly reduce the amortized optimization overhead by allowing multiple synthesized models to share one set of downsampled parameters. Our optimized parameters generalize well to novel lighting and viewing configurations, and the resulting data sets offer several orders of magnitude storage savings.

Towards Real-Time Monte Carlo for Biomedicine

Shuang Zhao, Rong Kong, Jerome Spanier

International Conference on Monte Carlo and Quasi-Monte Carlo Methods in Scientific Computing, August 2016

Monte Carlo methods provide the "gold standard" computational technique for solving biomedical problems but their use is hindered by the slow convergence of the sample means. An exponential increase in the convergence rate can be obtained by adaptively modifying the sampling and weighting strategy employed. However, if the radiance is represented globally by a truncated expansion of basis functions, or locally by a region-wise constant or low degree polynomial, a bias is introduced by the truncation and/or the number of subregions. The sheer number of expansion coefficients or geometric subdivisions created by the biased representation then partly or entirely offsets the geometric acceleration of the convergence rate. In addition, the (unknown amount of) bias is unacceptable for a gold standard numerical method. We introduce a new unbiased estimator of the solution of the radiative transfer equation (RTE) that constrains the radiance to obey the transport equation. We provide numerical evidence of the superiority of this Transport-Constrained Unbiased Radiance Estimator (T-CURE) in various transport problems and indicate its promise for general heterogeneous problems.
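
To make the "slow convergence of the sample means" concrete, the sketch below shows a plain (non-adaptive) Monte Carlo estimator whose standard error shrinks only as the inverse square root of the sample count. It is not the T-CURE estimator; the helper name `mc_estimate` and the toy integrand x² on [0, 1] are assumptions chosen for illustration.

```python
import math
import random

def mc_estimate(f, n, seed=0):
    """Estimate the integral of f over [0, 1] with n uniform samples.

    Returns (sample mean, estimated standard error). Hypothetical helper.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    samples = [f(rng.random()) for _ in range(n)]
    mean = sum(samples) / n
    # Unbiased sample variance; the standard error of the mean is sqrt(var / n).
    var = sum((s - mean) ** 2 for s in samples) / (n - 1)
    return mean, math.sqrt(var / n)

# Using 100x more samples reduces the standard error by only about 10x.
coarse_mean, coarse_err = mc_estimate(lambda x: x * x, 100)
fine_mean, fine_err = mc_estimate(lambda x: x * x, 10_000)
```

The exact value of the toy integral is 1/3, so both estimates can be compared against it directly.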

Fitting Procedural Yarn Models for Realistic Cloth Rendering

Shuang Zhao, Fujun Luan, Kavita Bala

ACM Transactions on Graphics (SIGGRAPH 2016), 35(4), July 2016

Fabrics play a significant role in many applications in design, prototyping, and entertainment. Recent fiber-based models capture the rich visual appearance of fabrics, but are too onerous to design and edit. Yarn-based procedural models are powerful and convenient, but too regular and not realistic enough in appearance. In this paper, we introduce an automatic fitting approach to create high-quality procedural yarn models of fabrics with fiber-level details. We fit CT data to procedural models to automatically recover a full range of parameters, and augment the models with a measurement-based model of flyaway fibers. We validate our fabric models against CT measurements and photographs, and demonstrate the utility of this approach for fabric modeling and editing.

2015

Matching Real Fabrics with Micro-Appearance Models

Pramook Khungurn, Daniel Schroeder, Shuang Zhao, Kavita Bala, Steve Marschner

ACM Transactions on Graphics, 35(1), December 2015

Micro-appearance models explicitly model the interaction of light with microgeometry at the fiber scale to produce realistic appearance. To effectively match them to real fabrics, we introduce a new appearance matching framework to determine their parameters. Given a micro-appearance model and photographs of the fabric under many different lighting conditions, we optimize for parameters that best match the photographs using a method based on calculating derivatives during rendering. This highly applicable framework, we believe, is a useful research tool because it simplifies development and testing of new models.

Using the framework, we systematically compare several types of micro-appearance models. We acquired computed microtomography (micro CT) scans of several fabrics, photographed them under many viewing/illumination conditions, and matched several appearance models to this data. We compare a new fiber-based light scattering model to the previously used microflake model. We also compare representing cloth microgeometry using volumes derived directly from the micro CT data to using explicit fibers reconstructed from the volumes. From our comparisons we make the following conclusions: (1) given a fiber-based scattering model, volume- and fiber-based microgeometry representations are capable of very similar quality, and (2) using a fiber-specific scattering model is crucial to good results as it achieves considerably higher accuracy than prior work.

2014

Modeling and Rendering Fabrics at Micron-Resolution

Shuang Zhao

Department of Computer Science, Cornell University, August 2014

Building Volumetric Appearance Models of Fabric using Micro CT Imaging

Shuang Zhao, Wenzel Jakob, Steve Marschner, Kavita Bala

Communications of the ACM (Research Highlights), 57(11), November 2014

Cloth is essential to our everyday lives; consequently, visualizing and rendering cloth has been an important area of research in graphics for decades. One important aspect contributing to the rich appearance of cloth is its complex 3D structure. Volumetric algorithms that model this 3D structure can correctly simulate the interaction of light with cloth to produce highly realistic images of cloth. But creating volumetric models of cloth is difficult: writing specialized procedures for each type of material is onerous, and requires significant programmer effort and intuition. Further, the resulting models look unrealistically “perfect” because they lack visually important features like naturally occurring irregularities.

This paper proposes a new approach to acquiring volume models, based on density data from X-ray computed tomography (CT) scans and appearance data from photographs under uncontrolled illumination. To model a material, a CT scan is made, yielding a scalar density volume. This 3D data has micron resolution details about the structure of cloth but lacks all optical information. So we combine this density data with a reference photograph of the cloth sample to infer its optical properties. We show that this approach can easily produce volume appearance models with extreme detail, and at larger scales the distinctive textures and highlights of a range of very different fabrics such as satin and velvet emerge automatically—all based simply on having accurate mesoscale geometry.

High-Order Similarity Relations in Radiative Transfer

Shuang Zhao, Ravi Ramamoorthi, Kavita Bala

ACM Transactions on Graphics (SIGGRAPH 2014), 33(4), July 2014

Radiative transfer equations (RTEs) with different scattering parameters can lead to identical solution radiance fields. Similarity theory studies this effect by introducing a hierarchy of equivalence relations called "similarity relations". Unfortunately, given a set of scattering parameters, it remains unclear how to find altered ones satisfying these relations, significantly limiting the theory's practical value. This paper presents a complete exposition of similarity theory, which provides fundamental insights into the structure of the RTE's parameter space. To utilize the theory in its general high-order form, we introduce a new approach to solve for the altered parameters including the absorption and scattering coefficients as well as a fully tabulated phase function. We demonstrate the practical utility of our work using two applications: forward and inverse rendering of translucent media. Forward rendering is our main application, and we develop an algorithm exploiting similarity relations to offer "free" speedups for Monte Carlo rendering of optically dense and forward-scattering materials. For inverse rendering, we propose a proof-of-concept approach which warps the parameter space and greatly improves the efficiency of gradient descent algorithms. We believe similarity theory is important for simulating and acquiring volume-based appearance, and our approach has the potential to benefit a wide range of future applications in this area.
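
For orientation, the lowest level of this hierarchy is the classic order-1 similarity relation, which keeps the absorption coefficient fixed and preserves the product of the scattering coefficient and (1 − g), where g is the mean cosine of the phase function. The sketch below applies only that order-1 relation; it is not the high-order solver introduced in the paper, and the function name `reduce_order1` and its parameters are hypothetical.

```python
def reduce_order1(sigma_a, sigma_s, g, g_star=0.0):
    """Return altered (sigma_a*, sigma_s*) under the order-1 similarity relation.

    Relation assumed here: sigma_a* = sigma_a and
    sigma_s* (1 - g*) = sigma_s (1 - g). Hypothetical helper for illustration.
    """
    if not -1.0 < g_star < 1.0:
        raise ValueError("g_star must lie strictly between -1 and 1")
    # Solve the relation for the altered scattering coefficient.
    sigma_s_star = sigma_s * (1.0 - g) / (1.0 - g_star)
    return sigma_a, sigma_s_star

# A dense, strongly forward-scattering medium (g = 0.9) maps to an
# isotropic one (g* = 0) with roughly 10x less scattering.
sigma_a_star, sigma_s_star = reduce_order1(sigma_a=0.1, sigma_s=10.0, g=0.9)
```

A lower altered scattering coefficient means fewer scattering events per path, which is the intuition behind the rendering speedups mentioned above.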

2013

Inverse Volume Rendering with Material Dictionaries

Ioannis Gkioulekas, Shuang Zhao, Kavita Bala, Todd Zickler, Anat Levin

ACM Transactions on Graphics (SIGGRAPH Asia 2013), 32(6), November 2013