¹NVIDIA, ²University of California, Irvine, ³University of California, San Diego

The continued advancements of time-of-flight imaging devices have enabled new imaging pipelines with numerous applications. Consequently, several forward rendering techniques capable of accurately and efficiently simulating these devices have been introduced. However, general-purpose differentiable rendering techniques that estimate derivatives of time-of-flight images are still lacking. In this paper, we introduce a new theory of differentiable time-gated rendering that enjoys the generality of differentiating with respect to arbitrary scene parameters. Our theory also allows the design of advanced Monte Carlo estimators capable of handling cameras with near-delta or discontinuous time gates.

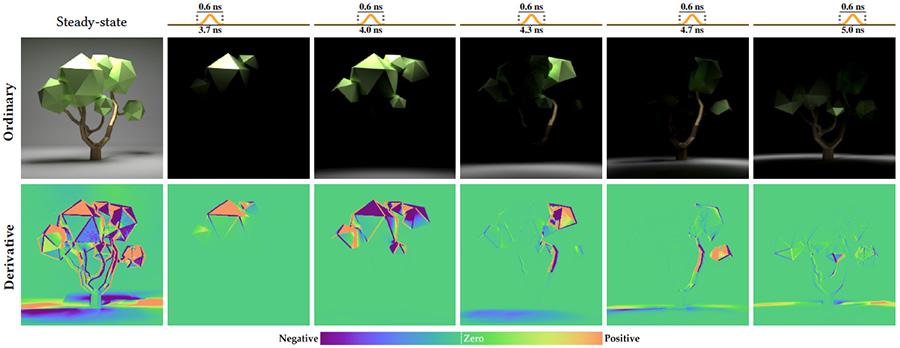

We validate our theory by comparing derivatives generated with our technique and finite differences. Further, we demonstrate the usefulness of our technique using a few proof-of-concept inverse-rendering examples that simulate several time-of-flight imaging scenarios.
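The finite-difference comparison mentioned above can be illustrated with a deliberately tiny sketch. It is not the paper's estimator and does not simulate light transport: it assumes a single round-trip path to a wall at distance `d`, a smooth Gaussian time gate, inverse-square falloff, and a normalized speed of light. All function names and constants are hypothetical choices made for illustration. A smooth gate is used because naive differentiation of this kind is only expected to work when the gate is differentiable; near-delta or discontinuous gates are the harder case the paper targets.

```python
import math

C = 1.0  # speed of light in normalized scene units (assumption)


def gate(t, t0=2.0, sigma=0.1):
    """Smooth Gaussian time gate centered at t0 (hypothetical gate shape)."""
    return math.exp(-0.5 * ((t - t0) / sigma) ** 2)


def measurement(d):
    """Toy time-gated response of one round-trip path to a wall at distance d."""
    t = 2.0 * d / C          # time of flight of the round trip
    return gate(t) / d ** 2  # gated contribution with inverse-square falloff


def analytic_derivative(d, t0=2.0, sigma=0.1):
    """Derivative of measurement(d) with respect to d, via the chain and product rules."""
    t = 2.0 * d / C
    g = gate(t, t0, sigma)
    dg_dt = -(t - t0) / sigma ** 2 * g  # derivative of the Gaussian gate in t
    return dg_dt * (2.0 / C) / d ** 2 - 2.0 * g / d ** 3


def finite_difference(f, x, h=1e-5):
    """Central finite difference, used here as the reference derivative."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


d = 1.05
fd = finite_difference(measurement, d)
ad = analytic_derivative(d)
rel_err = abs(fd - ad) / abs(ad)
print(f"finite difference: {fd:.6f}, analytic: {ad:.6f}, relative error: {rel_err:.2e}")
```

In this toy setting the two derivatives should agree closely. A real validation would compare full derivative images, and the step size `h` would need tuning against Monte Carlo noise.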

- Paper: pdf (17 MB)

- Talk slides: pptx (22 MB)

- Supplemental material: html, zip (448 MB)

- Code: zip (8.5 MB)

@article{Wu:2021:DTGR,
  title={Differentiable Time-Gated Rendering},
  author={Wu, Lifan and Cai, Guangyan and Ramamoorthi, Ravi and Zhao, Shuang},
  journal={ACM Trans. Graph.},
  volume={40},
  number={6},
  year={2021},
  pages={287:1--287:16}
}

We thank the anonymous reviewers for their comments and suggestions. This work was started while Lifan Wu and Guangyan Cai were students at UCSD, and the work was supported in part by NSF grant 1900927, an NVIDIA Fellowship, and the Ronald L. Graham Chair. We also acknowledge the recent award of NSF grant 2105806.